Letter from the editors

Kate Fernie, Gregory Markus

For this issue of EuropeanaTech Insight we focus on 3D, which is rapidly becoming one of digital cultural heritage’s leading formats and an area where many institutions are building capacity and discussing approaches and principles. High-resolution 3D digitisation of archaeological monuments and historic buildings has become widespread for research, conservation and management, and 3D digitisation of museum objects is increasingly common. The take-up of 3D technology is bringing new opportunities for the sector: institutions are offering innovative and engaging ways for people to access heritage content for education, tourism and personal enjoyment. While 3D is helping the sector to address some challenges, it is also throwing up new ones. How can the sector best embrace 3D, given access to new technologies and the speed of technological change?

The recent EuropeanaTech task force on 3D content, led by Kate Fernie, explored how to increase the availability of 3D content in Europeana. For this edition of Insight, we invited four contributors who were involved in the task force to discuss their institutions, projects and products related to the 3D presentation of digital cultural heritage.

We hope that these perspectives, together with the task force’s final report, will help EuropeanaTech shine a light on questions related to 3D digitisation and cultural heritage, both within Europeana and across the sector at large.

Europeana & IIIF 3D: Next Steps & Added Dimensions?

Ronald Haynes, University of Cambridge

If space is the final frontier, then we naturally turn to 3D images and models to help us explore and express that frontier. For the many aspects of space, and of our world, there are now widespread options for producing good 3D models, and these models are increasingly available to specialists and the general public alike, including on common devices. However, the many different ways in which people scan, process, adjust, store, present and use those models make them difficult to share readily, whether today or, once sustainably preserved, well into the future.

The IIIF 3D Community Group (the International Image Interoperability Framework 3D group) has been an enthusiastic partner in the work of the 3D Content in Europeana Task Force, and is working with many 3D-active groups and developers engaged in existing and emerging efforts to produce practical, interoperable 3D models and to integrate 2D (IIIF) images with 3D models. Just as the Task Force highlighted concerns about improving the policies, standardisation and means of delivery associated with 3D, the IIIF 3D Community Group is building greater engagement with partners in the academic, commercial and non-profit sectors who are keen to collaborate in advancing and developing 3D image technologies.

IIIF has developed a set of APIs (application programming interfaces) that are derived from shared use cases and based on open web standards, but it is also a community that implements those specifications in both server and client software. This combination of collaborative casework and community development has helped IIIF coalesce around ways to address many of the challenges associated with the coordinated use of 2D images, notably in the cultural heritage and GLAM (Galleries/Gardens, Libraries, Archives, Museums) sectors, and it has similarly developed specifications for the interoperable use of audio/video content. Extending these developments to include 3D materials does, however, literally add an extra dimension and extra complexity to the mix. (See the figure below for an earlier example of a lasting standard for 3D/VR, well preserved and digitally transferred so that it is still usable on modern devices.)

Among the challenges facing the 3D Group’s collaborative community work are key considerations around preservation options (e.g. with the Digital Preservation Coalition and CS3DP, Community Standards for 3D Data Preservation). Keeping the required outcomes of sustainability and long-term usability in sight provides a helpful lens for focusing these efforts, including regular reviews of practical approaches to scanning, capturing or constructing models, and of the strengths and weaknesses of various file formats, whether for size and transmission speed, display clarity, research detail and/or effective printing. As noted in the Task Force report, there are many well-known file formats, including some especially familiar to those involved in 3D printing (e.g. STL, PLY, OBJ, the ISO-standard AMF, and 3MF; see, for example, 2020 Most Common 3D Printer File Formats).

However, for those more concerned with 3D display, especially display via the web, whether for research, clinical or general-interest uses, we see more use of file formats such as glTF (GL Transmission Format, used e.g. by Sketchfab, MorphoSource, and the Smithsonian 3D Program and Open Access), the ISO-standard X3D (developed by the Web3D Consortium), and the medically oriented DICOM (Digital Imaging and Communications in Medicine, a standard that integrates data from X-ray, CT, ultrasound and MRI scans with patient information). (See the figure and video link below for an example of the 3D extension of the open source Universal Viewer, widely used in IIIF projects, which is in late development and being implemented in MorphoSource to integrate 2D and 3D content.)

The variety of 3D file formats, together with the feature preferences and performance limitations that shape format choices in the main web-based viewer projects, is part of an inevitable process of narrowing what is, for many, an unmanageable number of options down to a more manageable set of formats. While niche or specialist file formats may remain for particular purposes, improved file conversion options will continue to ease the transition to the more popular and increasingly common formats. This is the natural evolution toward practical and enduring standards, and its pace will largely depend on the strength of uptake and the persuasive influence of the collaborative community involved. Building on open standards for text and 2D images, and working together to ensure their seamless combination with 3D objects, should help reduce existing digital divides and increase overall accessibility by ensuring the broadest possible data interoperability. In addition, it may be helpful to collaboratively clarify and develop guidance on file format selection (e.g. why certain projects choose glTF, X3D or DICOM, and related compression or large-model options).

Another factor expected to influence the move toward more manageable 3D file formats is the development and adoption of WebXR (Web eXtended Reality, designed for delivering VR and AR to devices, including head-mounted displays, through the Web). This move toward device independence, and toward more universal development cycles via Web deployment, has significant support and deployment from organisations such as Amazon, Google and Mozilla (e.g. see: Mozilla Hubs). Although it does not currently use WebXR, the Merge Cube, with its partner ClassVR, offers a good example of the great potential for putting the power of AR/XR more directly into people’s hands: engagement experiments are being trialled or planned in some museums in Texas and at Harvard and Cambridge universities, along with some course-related testing at Oxford University. (See the figure and video link below for an example of some initial tests using the AR/XR capabilities of the Merge Cube to display and interactively inspect cultural heritage models taken from Sketchfab.)

Along with an object’s width and height (e.g. the X and Y axes), working in 3D raises the natural question of extending this by adding depth (e.g. a Z axis). There are also questions about annotation and metadata (with work in progress to be seen in the Universal Viewer and MorphoSource, and in the open source Smithsonian Voyager viewer and the Smithsonian 3D Program). There are challenges around finding or collectively identifying suitable reference workflows (e.g. to clarify the full set of equipment, processes, people and capabilities needed for 3D production, ideally in an increasingly interoperable format), and around exploring ways for smaller and less well-funded institutions to access suitable workflow and production options (e.g. through better joint funding or other means of supporting the growth of interoperability).

To address these challenges we plan to continue liaising and working with other 3D-active researchers and groups, building alliances and collaborative efforts. We are reviving some previously articulated 3D technical challenges (from a 2017 IIIF meeting at the V&A museum in London) to combine with more recent developments, and gathering user needs and stories (e.g. see initial IIIF 3D examples and use cases). We are also planning further events to clarify a set of suitably representative examples and use cases (including as part of the 2020 IIIF annual conference [ed. note: this event has now been postponed]). The 3D Community Group is co-chaired by Ed Silverton (Mnemoscene, Universal Viewer), Tom Flynn (Sketchfab) and Ronald Haynes (Cambridge University) and meets monthly for a conference call. Please join us next time, share your user stories, and take part in this evolving area; more details can be found via: https://iiif.io/community/groups/3d

The 3D Documentation and Reuse of Data Within the Cultural Heritage Sector in Ireland

Anthony Corns, Robert Shaw; The Discovery Programme

There is a broad understanding that many of our important cultural heritage and archaeological sites are vulnerable and exposed to potential threats, including natural disasters, human aggression and the ever-increasing impact of climate change. Once destroyed or damaged, these important structures and objects of our past are lost forever. Over the past decade there has been growing recognition that 3D digital documentation methods can play an important role in the preservation of cultural heritage structures and objects. 3D digital documentation also gives experts such as conservation scientists, archaeologists and historians an unprecedented level of access to very high-resolution data, which in turn allows them to identify new features and subtleties that are not easily recognised by the naked eye.

The 3D-ICONS Project

From 2012 to 2015, the Discovery Programme participated in the 3D-ICONS Project, a pilot project funded under the European Commission’s ICT Policy Support Programme, which established a complete pipeline for producing 3D replicas of archaeological monuments, historic buildings and artefacts and for contributing this content and its associated metadata to Europeana.

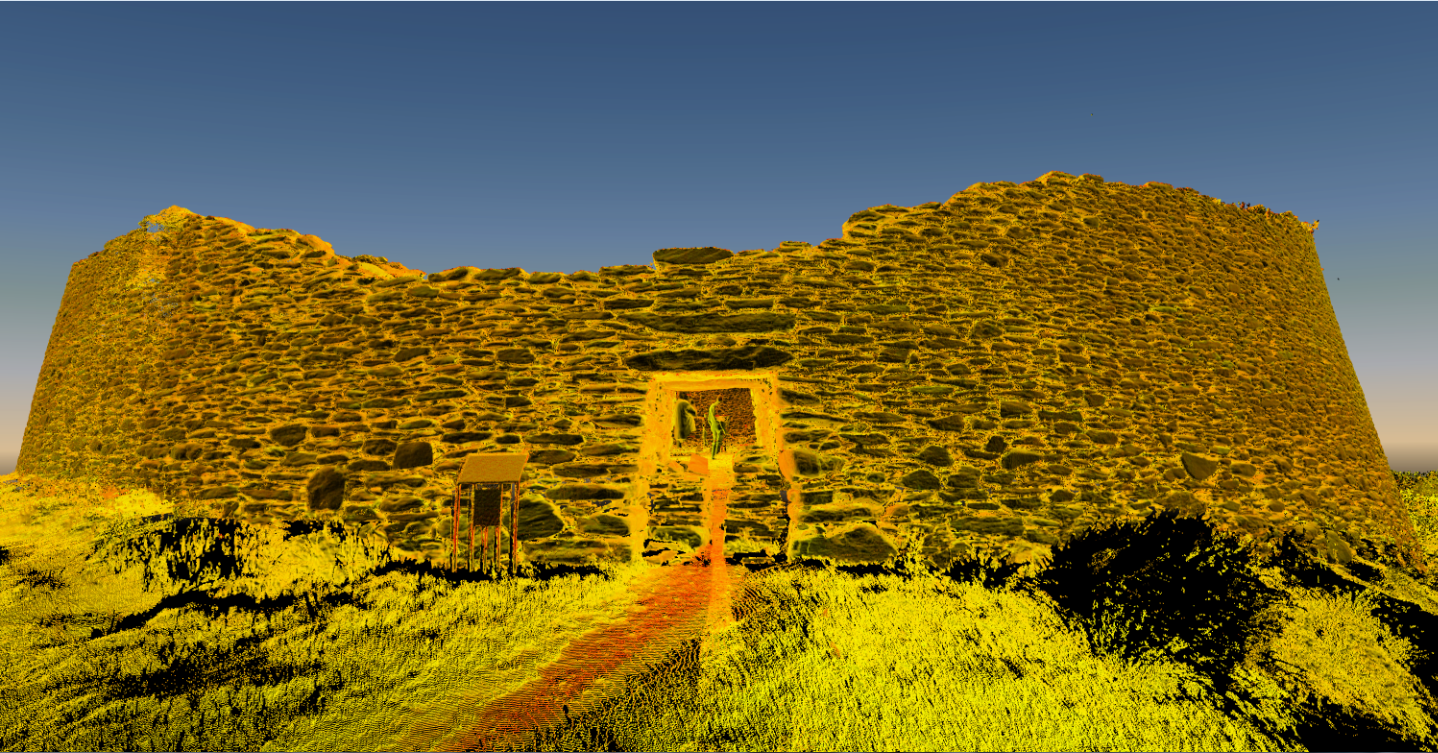

During the project, a selection of representative cultural monuments was recorded in Ireland, including the UNESCO World Heritage Sites of Brú na Bóinne and Skellig Michael and several other sites currently on the UNESCO Tentative List, such as the medieval monastery of Clonmacnoise, the Royal Site of Tara and the western stone forts of the Aran Islands. Many of these sites are National Monuments conserved and maintained by the National Monuments Service of Ireland, and they are also hugely popular with tourists and visitors.

The recording methodology used to document these monuments and structures in high resolution depended on the scale of the site and fell into three categories:

1. Cultural sites defined by landscapes were documented using existing airborne laser scanning (ALS) resources, both from fixed-wing and helicopter-based systems (FLI-MAP 400).

2. Upstanding monuments and architectural buildings were surveyed using a Faro Focus 120 terrestrial laser scanner with geo-referencing provided via GPS corrections.

3. Detailed objects such as carved stones and architectural details were recorded using an Artec EVA handheld optical scanner.

Initial Results

Although three very diverse techniques were employed in data capture, the result of this digitisation was similar across the project: high-volume, high-resolution 3D data. These scientific datasets are of exceptional value to engineers and architects and they can play a major role in monitoring and conservation of cultural heritage sites. Point clouds are an increasingly common survey output and most geomatics professionals are comfortable viewing and manipulating such data in specialist software. However, to the general public, such data can be very difficult to access and understand.

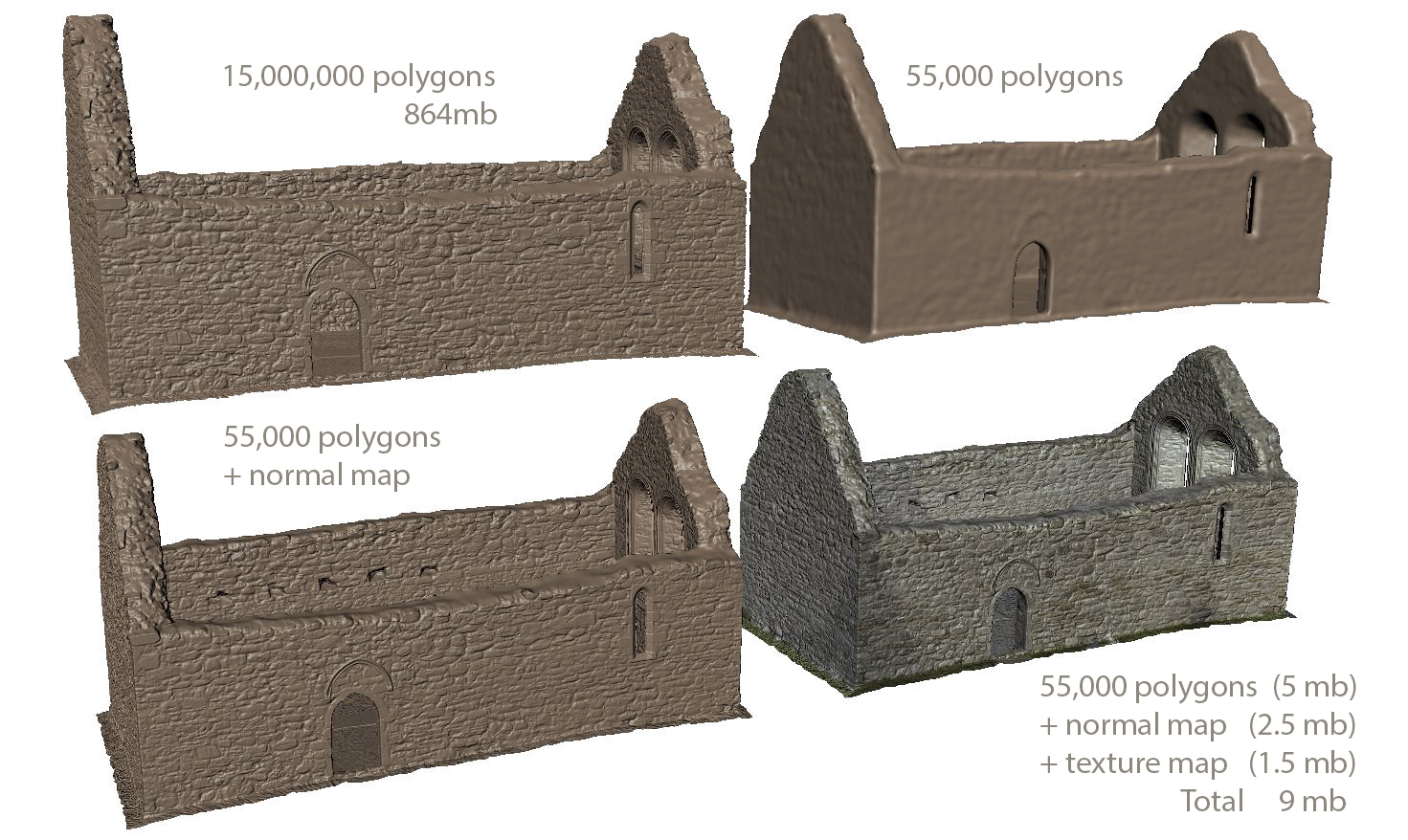

Interaction normally requires installing third-party viewers and navigating through the point cloud, an unfamiliar environment for inexperienced users that can be disconcerting: solid walls can appear transparent because the space between points increases on closer inspection. Also, 3D models have relatively large file sizes, commonly over 15 GB, which can be difficult to distribute via the web and challenging to display on a standard computer.

The solution came from applying techniques and software more commonly associated with the gaming industry and its creation of photorealistic game assets. By using low-polygon, retopologised models with UV texture maps (normal, ambient occlusion and colour maps), we preserved the perceived detail of the high-resolution cultural heritage models at a much lower, manageable file size: approximately 2% of the original. During the later phase of the project, the online 3D viewing platform Sketchfab reached maturity and became our platform of choice for delivering content over the internet to a wide range of users on both desktop and mobile devices. Additional functionality has emerged over time, including the ability to embed narrative within models and provide enhanced virtual tours of many of the sites and monuments.
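To illustrate why texture maps can stand in for geometry: a normal map stores a surface direction per pixel, so a low-polygon model can be lit as if it still carried the fine detail of the original scan. The sketch below shows the common convention for packing a unit normal into an 8-bit RGB pixel, mapping each component linearly from [-1, 1] to [0, 255]. It is a generic illustration, not the Discovery Programme’s actual baking workflow, and `encode_normal` and `decode_normal` are hypothetical names.

```python
from __future__ import annotations

import math


def encode_normal(nx: float, ny: float, nz: float) -> tuple[int, int, int]:
    """Pack a surface normal into an 8-bit RGB pixel: each component of the
    (re-normalised) vector is mapped linearly from [-1, 1] to [0, 255]."""
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0:
        raise ValueError("normal must be non-zero")
    return tuple(round((c / length + 1.0) / 2.0 * 255) for c in (nx, ny, nz))


def decode_normal(r: int, g: int, b: int) -> tuple[float, float, float]:
    """Inverse mapping (exact only up to 8-bit quantisation), re-normalised."""
    comps = [c / 255 * 2.0 - 1.0 for c in (r, g, b)]
    length = math.sqrt(sum(c * c for c in comps))
    return tuple(c / length for c in comps)
```

With the rounding used here, a flat tangent-space normal (0, 0, 1) encodes as (128, 128, 255), which is why tangent-space normal maps look predominantly light blue; some tools truncate instead and produce 127 for the zero components.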

Continued Use of the Data

The EU funding of the 3D-ICONS project ended in January 2015, and with it the project and its activities came to a close. However, the project’s outputs and legacy were far from over. Beyond the 230 Irish 3D models made available online during the project, the 3D content created by the Discovery Programme now covers 400 sites, monuments and artefacts, available through both Sketchfab and the Europeana portal. The Discovery Programme’s content on Sketchfab now has 8,500 followers, and its top model has received 59,000 views; the platform gives the Discovery Programme a great way to engage with new audiences whose primary focus is not cultural heritage.

We have seen the data reused across several diverse sectors and audiences, including:

Education: Although we instinctively felt that 3D-ICONS Ireland had the potential to become an educational resource, the opportunity to pursue this agenda arose somewhat by chance. Through word of mouth, we became aware that the 3D-ICONS website (www.3dicons.ie) was being used as a classroom resource by primary and secondary teachers in local schools, including for teaching History of Art at Leaving Certificate level. In first-year history classes, teachers have used the 3D content to illustrate different monumental structures and historic art forms to their pupils, using tablets and video projectors in the classroom. Teachers have also used the resource to educate and orient students before they visit one of the recorded monuments on a class trip.

Tourism: Ireland’s appeal as a tourist destination is strongly associated with the wealth of cultural heritage sites that attract visitors from around the world. The visitor experience ranges from free, unguided access to controlled tours with restricted access, such as at the Brú na Bóinne World Heritage Site. By repurposing the data collected in the 3D-ICONS project and adding further content, models and visualisations, the Office of Public Works (OPW), which manages many sites, has delivered a richer and increasingly interactive tourist experience. Laser scan data of a passage tomb that is closed to the public has been made virtually accessible through high-resolution, photorealistic rendered models that guide visitors through this normally inaccessible space. In addition, high-resolution 3D models of megalithic art are now presented in a purpose-built visitor attraction, where the public can view enhanced visualisations of the Neolithic artwork and understand the different artistic motifs used in the past. The ability to reuse data initially collected for conservation to enhance and valorise cultural heritage sites is a powerful proposition, given the potential to extend the use of 3D content across the 750 sites managed by the OPW.

Creative Sectors: Following the discovery of its 3D content through portals such as Europeana and Sketchfab, the Discovery Programme has commercially licensed several 3D datasets for reuse to a range of creative sectors. These include film production companies, who have used the 3D models in pre-production, to visualise and efficiently plan filming locations, and in post-production, to create visual effects. As many of the 3D models have been retopologised into lightweight versions, game developers have licensed content so that they can reuse authentic, off-the-shelf models within their development pipelines. Architects have also reused content where associating their own sites with cultural heritage sites aids their marketing. The Discovery Programme has also licensed data to individual artists, who have repurposed the 3D data in their medium of choice, creating completely new yet authentic artworks based on our shared cultural heritage.

The often serendipitous reuse of the 3D data created by the Discovery Programme has highlighted the lack of formal structure and support for promoting 3D cultural heritage content across the range of potential sectors. Recording our finite cultural heritage objects as high-resolution 3D data does not only help professionals to improve their understanding and management of this resource. Ensuring that this data can be promoted and reused by a wide range of sectors and actors benefits both the wider public, by enabling access to their shared heritage, and several industries, through the authentic exploitation of our cultural heritage, with the potential for this to partially fund some activities within the cultural heritage sector.

3D Activities of the Europeana Common Culture Project

Kathryn Cassidy, Mashal Ahmad, Trinity College Dublin; Rónán Swan, Archaeology & Heritage, Transport Infrastructure Ireland; Cosmina Berta, DnB

Introduction

3D data is a hot topic in the world of cultural heritage, and a number of ongoing projects are developing guidelines and examples of how to aggregate 3D content to Europeana. The 3D activity of the Europeana Common Culture project, led by Trinity College Dublin, has been working with the 3D Content in Europeana Task Force on best-practice recommendations for 3D content.

We have been working with Transport Infrastructure Ireland as an industry partner, who have provided a selection of the 3D cultural heritage data collected in the course of their work.

Using 3D content

3D content is complex, and how you publish it will depend on the expected use cases.

Direct download of a 3D model may be the preferred option for expert users and researchers. 3D printing is another use case for 3D content, typically requiring the download of a .stl or .obj file version of the model.

Downloading a model does not, however, provide a very satisfactory experience for the majority of users without access to specialised software. For these users, a browser-based interactive visualisation is much more useful.

As VR technology becomes more widespread and affordable, more and more users will also want to be able to view 3D content using a VR headset in addition to browser and download options.

As part of our 3D work, we wanted to look at options to enable each of these use cases for the 3D content aggregated to Europeana.

Preparing 3D Data for Aggregation

As a National Aggregator, we are not involved in creating 3D data, rather we offer storage, preservation, discovery on a national portal, and aggregation to Europeana. This means that we have little control over the data capture process and we work with a wide range of 3D formats.

Our aim was to create a semi-automated data processing pipeline relying primarily on open source software such as CloudCompare, MeshLab and Blender, or on programming-based solutions such as Open3D.

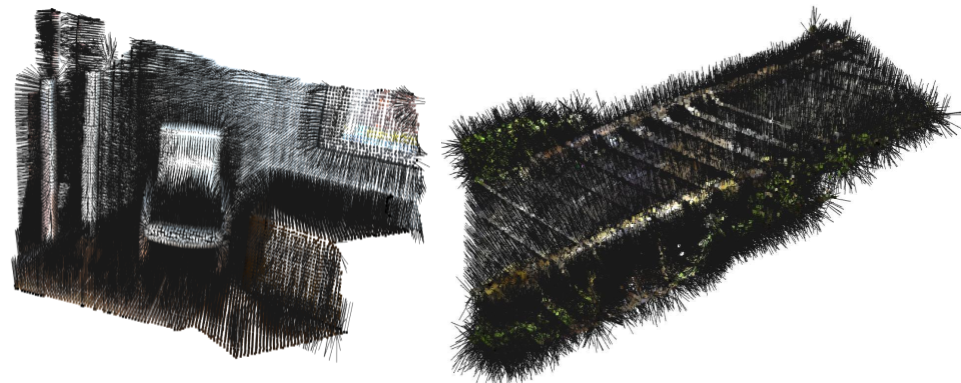

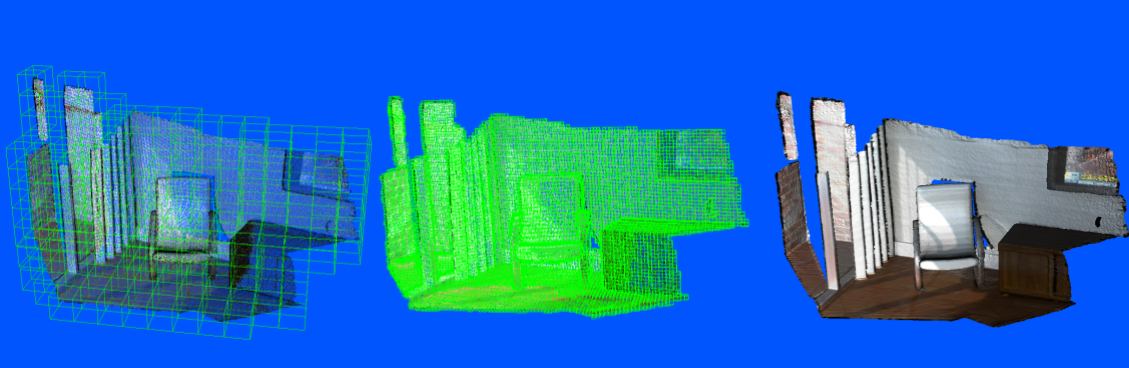

We are working with a dataset provided by Transport Infrastructure Ireland, which consists of terrestrial laser scans captured with a Faro Focus S120 and a Leica TS15. Some of the images in this article use the Dysert Bridge model from that dataset. These 3D files come in a range of industry-specific point cloud formats, with between 3 million and 296 million points per file. A point cloud file is essentially a set of points in three-dimensional space which, viewed together, show an object or landscape.
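As a concrete illustration of what such a file contains, many point cloud tools can export a plain-text "XYZ" layout with one point per line (x y z, often followed by colour or intensity columns). The parser below is a minimal sketch assuming that simple ASCII layout; it does not read the proprietary scanner formats in the Transport Infrastructure Ireland dataset, and `parse_xyz` and `bounding_box` are hypothetical helper names.

```python
from __future__ import annotations


def parse_xyz(text: str) -> list[tuple[float, float, float]]:
    """Parse an ASCII point cloud with one point per line: 'x y z [extra ...]'.

    Blank lines and '#' comment lines are skipped; columns after the first
    three (e.g. r g b or intensity) are ignored in this sketch.
    """
    points = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.replace(",", " ").split()
        if len(fields) < 3:
            raise ValueError(f"line {line_no}: expected at least x y z")
        x, y, z = (float(v) for v in fields[:3])
        points.append((x, y, z))
    return points


def bounding_box(points):
    """Axis-aligned extent: ((min_x, min_y, min_z), (max_x, max_y, max_z))."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```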

Although such large files may be useful for research, 3D printing, and other specialised use cases, they are not ideal for web delivery or in-browser rendering. In order to generate more lightweight 3D models, we adopted a surface reconstruction method which removes some detail and specifies only the surface of interest.

Pre-processing:

In order to construct a smooth surface, it is first necessary to remove noise and outlier points and to deal with misalignments and gaps in the data, using a variety of filters. Once this is done, a process called data decimation is used to downsample the data, reducing the number of points while keeping original properties such as colours and normals intact. Data decimation uses structures such as a voxel grid or an octree to group nearby points and average them, simplifying the model.
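The two pre-processing steps can be sketched in plain Python. This illustrates the general techniques under stated assumptions rather than the project’s actual pipeline: outliers are removed with a statistical filter (one common choice among the "variety of filters"), and decimation uses a voxel grid. Open3D, mentioned above, provides optimised equivalents such as `remove_statistical_outlier` and `voxel_down_sample` on its `PointCloud` class; the function names below are hypothetical.

```python
import math
import statistics
from collections import defaultdict


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Statistical outlier removal: drop points whose mean distance to their
    k nearest neighbours exceeds (average of that distance) + std_ratio * (its
    standard deviation). Brute-force O(n^2) neighbour search, so only suitable
    for small examples; real tools use an octree or k-d tree."""
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(sum(dists[:k]) / k)
    threshold = statistics.fmean(mean_dists) + std_ratio * statistics.pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


def voxel_downsample(points, voxel_size):
    """Voxel-grid decimation: replace all points that fall into the same cubic
    voxel by their centroid. Extra attributes (colours, normals) could be
    averaged the same way; this sketch keeps only x, y, z."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    buckets = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        buckets[key].append(p)
    return [tuple(sum(axis) / len(group) for axis in zip(*group))
            for group in buckets.values()]
```

For example, a 5 × 5 grid of points spaced 0.1 apart plus one distant stray point loses the stray point in the first step, and a 0.25 voxel size then reduces the 25 remaining points to 4 centroids.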

Normal Computation

Normals are an important property of geometric surfaces and are required to compute correct lighting, shading and visual effects. For a smooth surface, the normal at a point is defined as the vector perpendicular to the surface’s tangent plane at that point, and hence represents a localised approximation of the surface at that specific point.

Normals also need to be oriented consistently, so that they all point to the same side of the surface. An orientation method such as a minimum spanning tree (MST) or orienting normals along one of the main axes (x, y or z) gives consistently oriented normals, which together provide an approximation of the surface of the model.

For smooth normal computation, the local surface model can be specified as a plane, a triangulation or a quadric, depending on whether the point cloud has sharp edges, noise or curved surfaces, and the nearest neighbours used in the computation can be extracted using an octree.
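A minimal sketch of the "plane" option: for each point, take its k nearest neighbours, fit a plane by finding the direction of least variance of their covariance matrix, and use that direction as the normal; then orient every normal along a chosen main axis. The helper names are hypothetical, the neighbour search is brute force rather than octree-based, and MST-based orientation is not shown.

```python
from __future__ import annotations

import math


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def _cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def covariance(points):
    """3x3 covariance matrix of a neighbourhood of (x, y, z) points."""
    n = len(points)
    c = [sum(p[i] for p in points) / n for i in range(3)]
    d = [[p[i] - c[i] for i in range(3)] for p in points]
    return [[sum(v[i] * v[j] for v in d) / n for j in range(3)] for i in range(3)]


def smallest_eigenvector(a):
    """Unit eigenvector for the smallest eigenvalue of a symmetric 3x3 matrix:
    closed-form (trigonometric) eigenvalue, then the cross product of two rows
    of (A - lambda*I), which is orthogonal to that matrix's row space."""
    p1 = a[0][1] ** 2 + a[0][2] ** 2 + a[1][2] ** 2
    if p1 == 0:  # already diagonal: the axis with least variance is the normal
        i = min(range(3), key=lambda k: a[k][k])
        return tuple(1.0 if k == i else 0.0 for k in range(3))
    q = (a[0][0] + a[1][1] + a[2][2]) / 3
    p = math.sqrt((sum((a[k][k] - q) ** 2 for k in range(3)) + 2 * p1) / 6)
    b = [[(a[i][j] - (q if i == j else 0.0)) / p for j in range(3)] for i in range(3)]
    phi = math.acos(max(-1.0, min(1.0, _det3(b) / 2))) / 3
    lam = q + 2 * p * math.cos(phi + 2 * math.pi / 3)  # smallest eigenvalue
    rows = [[a[i][j] - (lam if i == j else 0.0) for j in range(3)] for i in range(3)]
    best = max((_cross(rows[i], rows[j]) for i, j in ((0, 1), (0, 2), (1, 2))),
               key=lambda v: _dot(v, v))
    norm = math.sqrt(_dot(best, best))
    if norm == 0:
        raise ValueError("normal is ambiguous for this neighbourhood")
    return tuple(x / norm for x in best)


def estimate_normals(points, k=10, towards=(0.0, 0.0, 1.0)):
    """Plane-fit normal for each point from its k nearest neighbours (the point
    itself included), flipped so that each normal has a non-negative
    component along the chosen axis `towards`."""
    normals = []
    for p in points:
        hood = sorted(points, key=lambda other: math.dist(p, other))[:k]
        n = smallest_eigenvector(covariance(hood))
        if _dot(n, towards) < 0:
            n = tuple(-x for x in n)
        normals.append(n)
    return normals
```

For points sampled from the tilted plane z = x, every estimated normal comes out parallel to (1, 0, -1)/√2, with its sign fixed by the orientation axis.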

Surface Reconstruction:

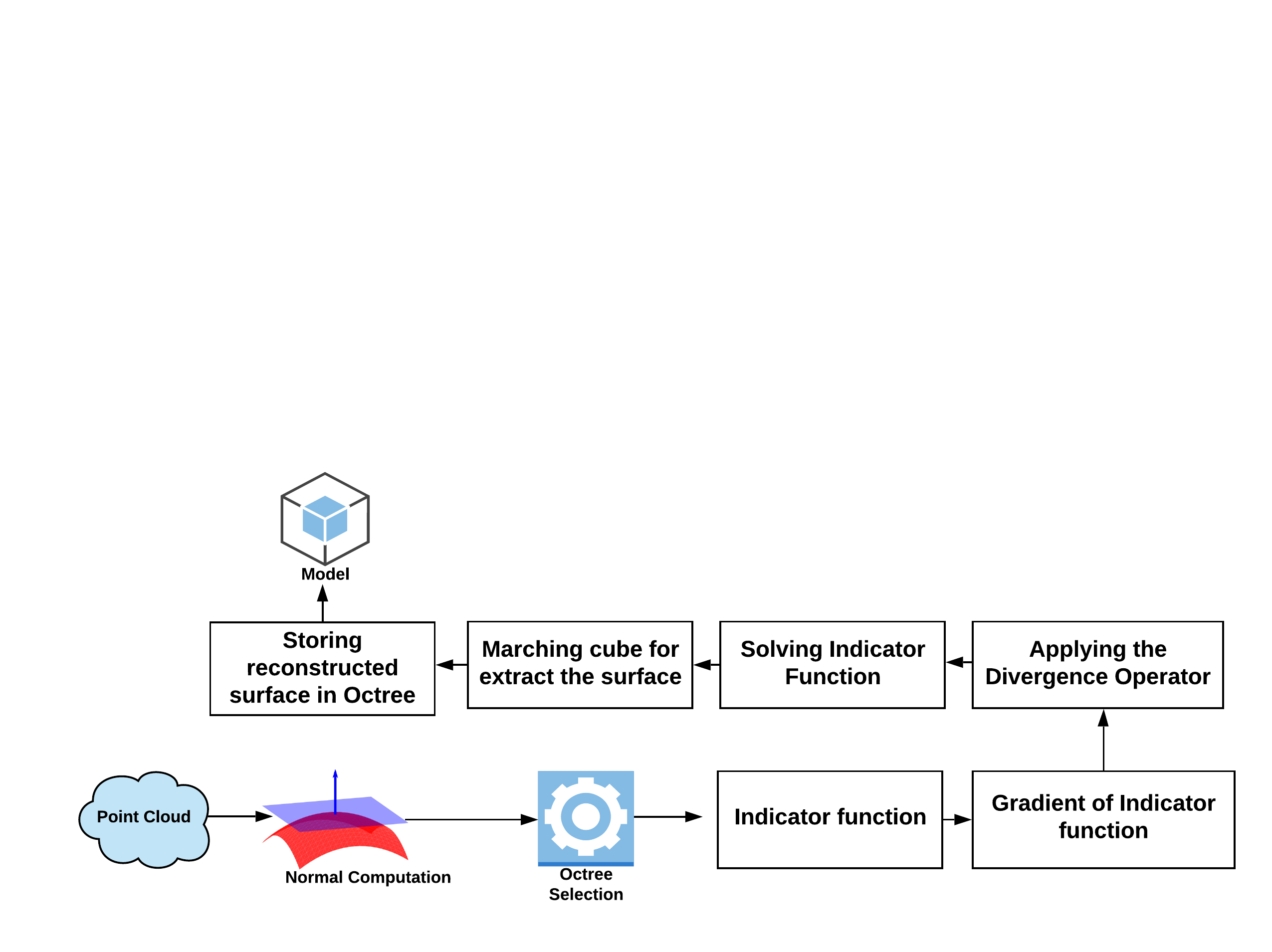

Surface reconstruction is the generation of a lightweight, watertight surface from a dense point cloud. We opted for the well-known Poisson surface reconstruction algorithm, an implicit surface representation technique that creates a 3D mesh from a point cloud.

The steps involved in the algorithm are shown in the flow diagram above.

Quality and computation time depend on several parameters of the Poisson reconstruction, e.g. octree depth, samples per node, solver divide and surface offsetting.
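For readers curious about what the Poisson algorithm actually solves, the formulation introduced by Kazhdan, Bolitho and Hoppe can be summarised as follows, where V is the vector field defined by the oriented normals and χ is the indicator function of the solid (1 inside, 0 outside). It also shows why octree depth matters: depth bounds the resolution of the grid on which χ is solved. This is a general statement of the method, not of the specific parameter values used in this pipeline.

```latex
% Find the indicator function \chi whose gradient best matches the normal field \vec{V}
\min_{\chi} \int \bigl\| \nabla\chi(\mathbf{p}) - \vec{V}(\mathbf{p}) \bigr\|^{2} \, d\mathbf{p}
\;\;\Longrightarrow\;\;
\Delta\chi = \nabla\cdot\vec{V}

% Extract the mesh as an isosurface of \chi, at the average value over the samples S
\partial M = \{\, \mathbf{q} \in \mathbb{R}^{3} : \chi(\mathbf{q}) = \gamma \,\},
\qquad
\gamma = \frac{1}{|S|} \sum_{s \in S} \chi(s.\mathbf{p})

% Octree depth d bounds the finest grid resolution
\text{grid resolution} \le 2^{d} \times 2^{d} \times 2^{d},
\qquad d = 8 \Rightarrow 256^{3}, \quad d = 10 \Rightarrow 1024^{3}
```

Raising the depth by one doubles the resolution along each axis, recovering finer detail at the cost of more memory and computation time; because the octree is adaptive, only cells near the surface are refined to the full depth.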

Fixing the Meshes: After surface reconstruction, the resulting mesh may have extra surfaces at boundary areas, or large gaps, which are fixed using MeshLab or Blender.

Exporting the 3D Model: A wide range of 3D model formats are available but many are proprietary formats which are less interoperable. We opted for open and neutral formats such as OBJ and STL.
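Both formats are simple enough to write by hand, which is part of what makes them neutral and interoperable. A minimal sketch, assuming a triangle mesh given as a list of vertex coordinates and a list of zero-based vertex-index triples; the function names are hypothetical, and the writers handle geometry only (no colours, textures or materials). Large meshes would usually be exported as binary STL in practice.

```python
from __future__ import annotations

import io
import math


def write_obj(vertices, faces, out) -> None:
    """Wavefront OBJ: 'v x y z' per vertex, then 'f i j k' per triangle, where
    indices are 1-based references into the shared vertex list."""
    for x, y, z in vertices:
        out.write(f"v {x} {y} {z}\n")
    for a, b, c in faces:
        out.write(f"f {a + 1} {b + 1} {c + 1}\n")


def _facet_normal(p0, p1, p2):
    """Unit normal of a triangle from the cross product of two edges
    (right-hand rule: counter-clockwise vertices face the viewer)."""
    u = [p1[i] - p0[i] for i in range(3)]
    v = [p2[i] - p0[i] for i in range(3)]
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(c * c for c in n)) or 1.0  # degenerate -> zero normal
    return tuple(c / length for c in n)


def write_ascii_stl(vertices, faces, out, name: str = "model") -> None:
    """ASCII STL: every triangle is stored independently with its own facet
    normal and its three vertex coordinates (no shared vertex list)."""
    out.write(f"solid {name}\n")
    for a, b, c in faces:
        p0, p1, p2 = vertices[a], vertices[b], vertices[c]
        nx, ny, nz = _facet_normal(p0, p1, p2)
        out.write(f"  facet normal {nx:e} {ny:e} {nz:e}\n    outer loop\n")
        for x, y, z in (p0, p1, p2):
            out.write(f"      vertex {x:e} {y:e} {z:e}\n")
        out.write("    endloop\n  endfacet\n")
    out.write(f"endsolid {name}\n")


# Usage: one triangle, written to in-memory buffers (a file opened with open() works too).
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
tris = [(0, 1, 2)]
obj_text, stl_text = io.StringIO(), io.StringIO()
write_obj(verts, tris, obj_text)
write_ascii_stl(verts, tris, stl_text)
```

Note the structural difference: OBJ shares vertices between faces through indices, while STL repeats the coordinates for every triangle and stores no shared topology.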

Aggregating 3D objects using EDM

The Share3D project’s Dashboard tool is a good example of what constitutes rich EDM descriptive metadata for 3D content, while the 3D-ICONS project’s Report on 3D Publication Formats Suitable for Europeana gives good guidance on file formats. At present, however, there is little guidance on how to map 3D content to the Europeana Publishing Framework.

The Europeana Publishing Framework identifies four different content tiers, each one with an associated scenario:

- Tier 1: Europeana Collections as a search engine

- Tier 2: Europeana Collections as a showcase

- Tier 3: Europeana Collections as a distribution platform

- Tier 4: Europeana Collections as a free re-use platform

To achieve Tier 1, the recommendations for supported content types typically require at least a thumbnail image and a link either to the content file or to a webpage where the file can be viewed.

For Tiers 2-4 it is recommended that a higher quality version of the content file or an embeddable object is provided along with the thumbnail image. A link to a webpage where the file can be viewed may also be provided.

To achieve this for 3D content, the EDM record might include:

- A thumbnail still image preview in the edm:object property

- A link to the 3D model file or to an embeddable object (e.g. Sketchfab embed code) in edm:isShownBy

- A link to a webpage where the object can also be viewed in edm:isShownAt

Careful consideration should be given to whether a direct link to a 3D model or an embed code is the best option. If your models are likely to be of more interest to researchers, the downloadable 3D model file may be the most appropriate option. However, this is unlikely to allow the model to be visualised within the Europeana platform.

In order to appeal to the widest audience, an embed code is likely to be the best choice. Optionally, an additional downloadable 3D model file link might be provided in an edm:hasView element.
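To make the mapping concrete, here is a minimal sketch that generates the links of an ore:Aggregation for a 3D object using Python’s standard library. All URLs and the function name are hypothetical placeholders, and the output is deliberately incomplete: a valid Europeana record also needs the edm:ProvidedCHO itself and mandatory aggregation properties such as edm:dataProvider, edm:provider and edm:rights.

```python
import xml.etree.ElementTree as ET

NS = {
    "rdf": "http://www.w3.org/1999/02/22-rdf-syntax-ns#",
    "ore": "http://www.openarchives.org/ore/terms/",
    "edm": "http://www.europeana.eu/schemas/edm/",
}
for _prefix, _uri in NS.items():
    ET.register_namespace(_prefix, _uri)


def qname(prefix: str, local: str) -> str:
    """Clark-notation name, e.g. '{http://...edm/}object', as ElementTree expects."""
    return f"{{{NS[prefix]}}}{local}"


def aggregation_for_3d(agg_uri, cho_uri, thumbnail_url, viewer_url,
                       landing_page_url, download_urls=()):
    """Build the link properties of an ore:Aggregation for one 3D object."""
    root = ET.Element(qname("rdf", "RDF"))
    agg = ET.SubElement(root, qname("ore", "Aggregation"),
                        {qname("rdf", "about"): agg_uri})

    def link(prop: str, url: str) -> None:
        ET.SubElement(agg, qname("edm", prop), {qname("rdf", "resource"): url})

    link("aggregatedCHO", cho_uri)
    link("object", thumbnail_url)          # still-image thumbnail preview
    link("isShownBy", viewer_url)          # embeddable viewer URL or model file
    link("isShownAt", landing_page_url)    # provider's web page for the object
    for url in download_urls:
        link("hasView", url)               # optional downloadable model file(s)
    return ET.tostring(root, encoding="unicode")
```

Keeping the embeddable viewer in edm:isShownBy and the raw model in edm:hasView follows the recommendation above: the widest audience gets an interactive view, while researchers can still find the downloadable file.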

The 3D-ICONS project recommended 3D PDF, HTML5/WebGL, Unity3D/Unreal and pseudo-3D as preferred content types. In fact, the most widely used 3D format in Europeana is 3D PDF. Typically, these datasets include a direct link to the 3D PDF file in edm:isShownBy. Unfortunately, Adobe is now dropping support for creating 3D PDF. Although 3D PDFs can still be viewed in some PDF viewers, many viewers do not support the format, and web-based viewers offer little support for it. The format also does not allow the 3D source object to be easily extracted for reuse, which some other viewers do allow. Thus, despite the large number of 3D PDF objects in Europeana, the Common Culture 3D activity is not recommending 3D PDF and is instead focusing on WebGL viewers.

Sketchfab is one of the most popular WebGL viewers in use in Europeana Collections. It also benefits from the support of other projects such as Share3D which has developed a dashboard which integrates with Sketchfab and allows the creation of rich descriptive metadata and aggregation to Europeana via CARARE.

An obvious advantage of online solutions such as Sketchfab is that the content provider or aggregator doesn’t need any storage capacity. Sketchfab also has a built-in option to view a model with a VR headset. However, these are proprietary solutions, which often involve costs depending on the size and number of objects published. There could also be issues with the longevity of the service or with the intellectual property rights of 3D content shared on such platforms.

Self-hosted viewers such as 3DHOP or the Smithsonian’s Voyager require storage capacity and server hosting, but as open source solutions they may be more sustainable in the long term. We are currently looking into both Sketchfab and open source self-hosted options.

MorphoSource: Creating a 3D web repository capable of archiving complex workflows and providing novel viewing experiences

Doug M. Boyer, Director of MorphoSource / Associate Professor of Evolutionary Anthropology, Julie M. Winchester, Lead Developer & Product Manager of MorphoSource, Department of Evolutionary Anthropology, Duke University; Edward Silverton, Mnemoscene

Introduction

MorphoSource™ is a public web repository where subject experts, collection curators, and the public can find, download, upload, and manage three-dimensional (3D) (and 2D) media representing physical objects, primarily biological specimens. Soon the site will support cultural heritage and other object types as well. Compared to other online resources, MorphoSource’s strength lies in its support for the specific, emerging needs of users for whom 3D imagery is data and evidence for research and education. Specifically, it emphasizes paradata, including support for 1) describing the sometimes complex acquisition and modification narratives of 3D media, 2) deep, nuanced metadata for 3D and 2D imaging modalities, and 3) efficient tracking and management of media by collections organizations. MorphoSource is the largest resource for biological 3D data in terms of both data depth (~100,000 datasets) and diversity (30,000 specimens and 12,200 species). As a result, it has been extensively adopted by its research community (~11,000 active users, 200,000 downloads, and 3.7 million views) and has a growing impact on scholarship (~550 citing publications) as well as education. Once upgraded with cultural heritage object support (est. Fall 2020), the repository will run on a Ruby on Rails web application. The MorphoSource Rails application is a specialized data management platform dedicated to providing deep support to independently operated collections organizations for archiving, curating, and managing access to 3D and 2D media representing, based on, or related to physical objects and data.

Challenges and Benefits of 3D Data Representing Physical Objects

3D digitization of physical objects provides an enormous opportunity to accelerate advances in and access to knowledge related to natural and cultural history, biodiversity, geology, geography and culture. Compared with access to a physical object, access to a 3D digital object can allow a user to examine more quantitative (and often otherwise inaccessible) information related to shape, materials, or internal structure. Additionally, 3D digital objects provide unparalleled accessibility, because users are not hindered by geographic distance or the number of other individuals and/or machines simultaneously examining that same virtual object.

For academic use cases, there are also significant challenges associated with purely virtual examination of physical objects. Principally, there is a risk that the virtual object is not a reliable representation of the physical object, or that the virtual object becomes inaccessible or misrepresented over the long term due to data corruption, lack of context, or file format obsolescence. This risk must be addressed and minimized as much as possible. There are several key components to mitigating such risks: 1) granular documentation of 3D image creation and modification (i.e., paradata); 2) use of open and supported file formats; and 3) use of standardized data models and metadata terms by repositories as much as possible.

Parenthetically, we note that these challenges are much less of a problem for 3D imagery that is not intended to accurately represent a physical object of natural historical or cultural relevance (e.g., a 3D painting of a toy unicorn), or in cases where the imagery is meant to summarize interpretations based on physical objects in order to express academic concepts (e.g., a 3D animation of a beating heart). In other words, we are focused on issues associated with 3D imagery that are meant, in some sense, as data for analysis or evidence of something that exists/existed in the real world. MorphoSource Rails as a repository platform has been created specifically to address the particular challenges associated with these 3D data, which differentiates it from other available web 3D resources.

Media Preview of 3D Models and Volumes

Though much of the rest of this article is devoted to the use of MorphoSource Rails to preserve provenance and the details of image acquisition narratives, it is equally important to give potential users tools to quickly and easily preview, and even work with, the 3D data in the repository. Displayed next to essential metadata on image data format, dimensions, and resolution, these previews make it much easier for users to determine whether a particular dataset meets their needs. By default, Hyrax supports the IIIF-compliant Universal Viewer, which provides web-embeddable media previews for a diversity of 2D media and document formats. While the Universal Viewer is an excellent open framework, the collective developing it has not yet had the opportunity to build out strong 3D support. To provide deeper 3D support, MorphoSource has collaborated with Mnemoscene to create Aleph, a web viewer for 3D models and volumes that can be used on its own or natively as a Universal Viewer extension. Aleph builds on open source frameworks for robust 3D display, including Three.js, A-Frame, and AMI Medical Imaging Toolkit, and has been designed to be extensible for future AR/VR applications.

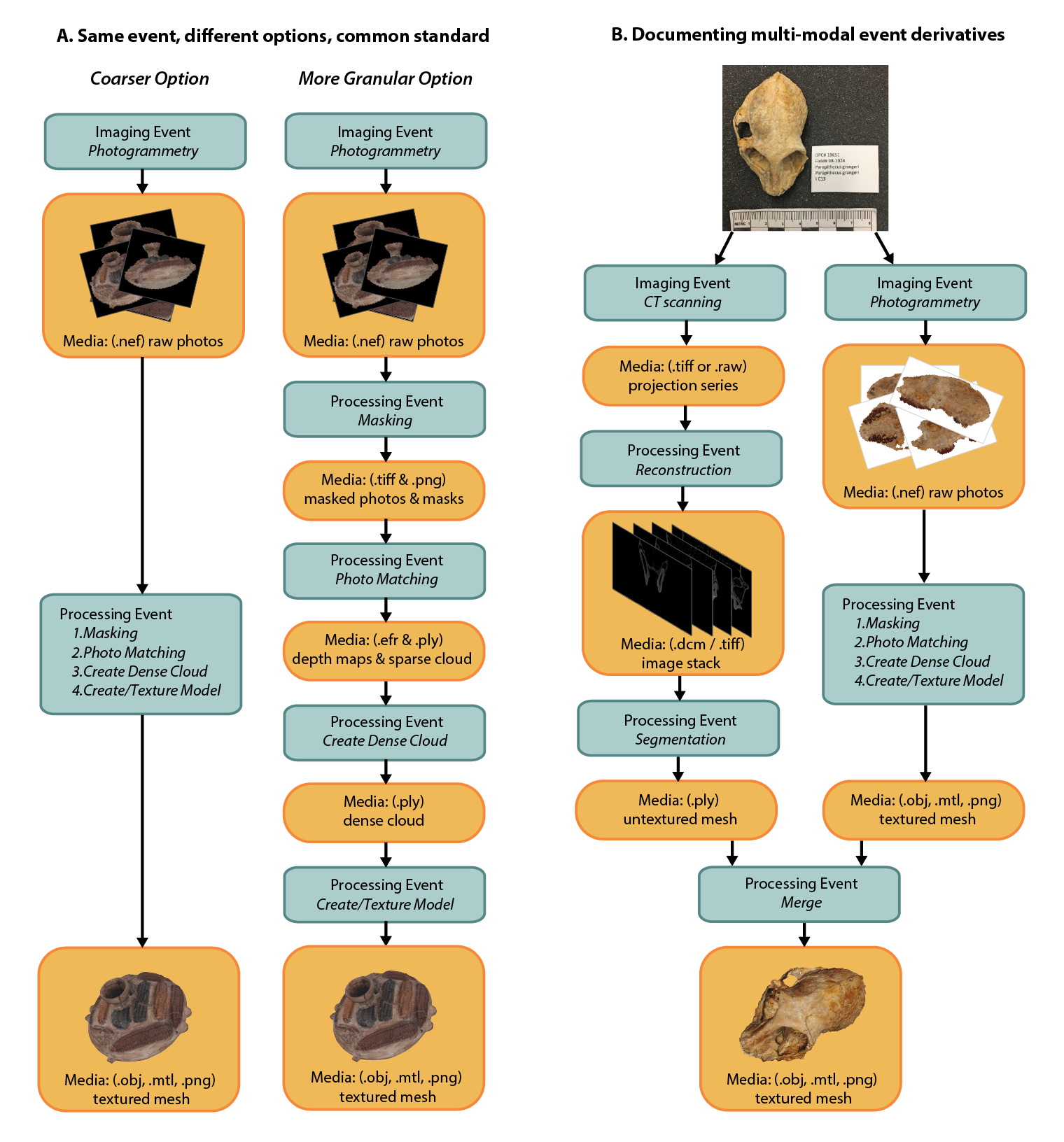

Representing Complex Image Acquisition Paradata

The most difficult problem we have seen data producers, repositories, and community-standards groups struggle with is how to record image acquisition paradata in a granular, standardized way while also accounting for the diversity and complexity of data creation workflows. MorphoSource Rails addresses this challenge with a data model (Figure 2) that represents media, physical objects, imaging devices, and other entities as a network of “entity” records that can be related to each other using “event” records (corresponding to “objects” and “events” in the PREMIS Data Dictionary). Each record, whether it represents an entity or an event, has specialized metadata describing that object or action. An “imaging event” record links each media file both to the record of the “physical object” it represents and to the record of the “device” or tool used to image that object. A complex series of derivative media can then be represented as a long chain of parent and child media entities connected by processing events.
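To make the entity/event pattern concrete, here is a minimal Python sketch. It is not MorphoSource Rails's actual schema: the classes `PhysicalObject`, `Device`, `Media`, `ImagingEvent`, `ProcessingEvent`, the function `provenance_chain`, and the example activity names are all hypothetical. It shows how an imaging event ties a media file to both a physical object and a device, and how derivative media form a parent/child chain through processing events; for simplicity it assumes the trace follows only the first input of each processing event.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional, Union


# Entity records (PREMIS-style "objects"); fields are illustrative only.
@dataclass
class PhysicalObject:
    identifier: str


@dataclass
class Device:
    identifier: str
    modality: str


@dataclass
class Media:
    identifier: str
    file_format: str
    # Event record that produced this media file (None if not yet linked).
    created_by: Optional[Event] = None


# Event records (PREMIS-style "events") relating entities to each other.
@dataclass
class ImagingEvent:
    physical_object: PhysicalObject
    device: Device


@dataclass
class ProcessingEvent:
    inputs: list[Media]
    # A single event may bundle several sequential processing activities.
    activities: list[str] = field(default_factory=list)


Event = Union[ImagingEvent, ProcessingEvent]


def provenance_chain(media: Media) -> list[Event]:
    """Walk from a media record back to its imaging event, newest event first."""
    chain: list[Event] = []
    current = media
    while current.created_by is not None:
        event = current.created_by
        chain.append(event)
        if isinstance(event, ImagingEvent):
            return chain
        if not event.inputs:
            break
        # Follow the first parent only; a fuller model would follow every input.
        current = event.inputs[0]
    raise ValueError(f"{media.identifier} is not linked to an imaging event")


# Usage (hypothetical records): a CT scan followed by one processing event.
specimen = PhysicalObject("specimen-001")
scanner = Device("scanner-A", "CT")
slices = Media("slices", "TIFF", ImagingEvent(specimen, scanner))
mesh = Media("mesh", "PLY", ProcessingEvent([slices], ["segmentation", "surface extraction"]))
chain = provenance_chain(mesh)
```

Because events point to their input media and media point to the event that produced them, any derivative can be traced back to the object and device behind it, however long the chain.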

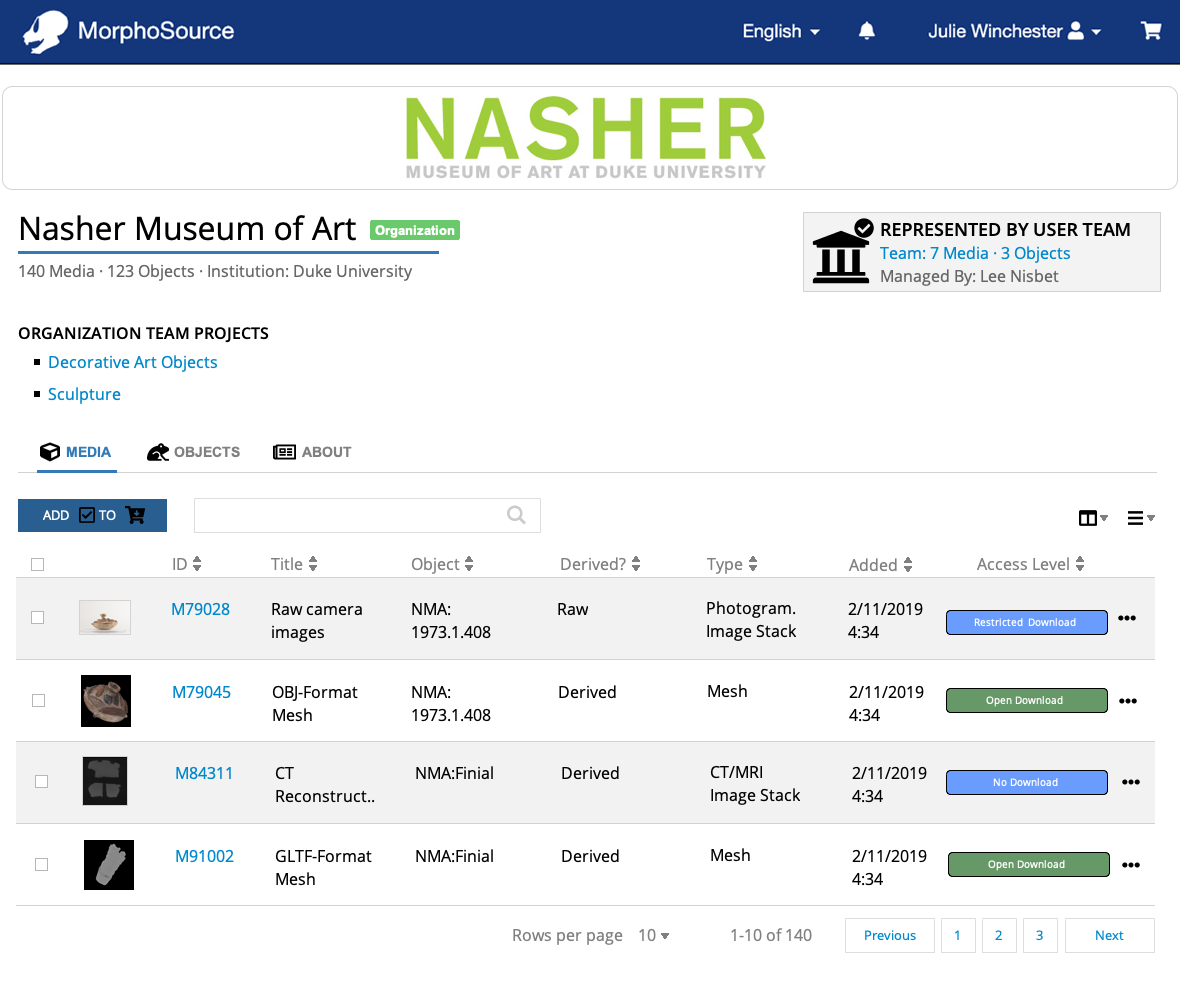

Agents or users are not tied directly to data creation workflows: data are contributed through a user or team MorphoSource Rails account (Figure 2, bottom row and management icons throughout). Ownership and management rights flow from users and teams and can be modified by them. As part of this management functionality, users and teams determine how and by whom data are accessed or modified. Organizational teams are special certified user groups that officially represent a collection or imaging facility organization. They have default management status for all specimens associated with their organization and view access to all media associated with specimens they manage. Records associated with an imaging event and its derivative chain can be owned by multiple users and teams in an ad hoc way. Any user can create projects that group media, for example to tag resources used in lesson plans or research articles. Through projects, users can also grant special access permissions for media they own to any number of other individual users.
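The view-access rules described above can be sketched as a single permission check. This is an illustrative assumption, not MorphoSource's code: `Team`, `MediaRecord`, `Project`, and `can_view` are hypothetical names, and the sketch covers only the access paths mentioned here (direct ownership, membership in an owning team, an organizational team managing the specimen, and sharing through a project created by an owner), ignoring any public or open-access settings.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Team:
    name: str
    members: set[str] = field(default_factory=set)
    # Certified team officially representing a collection or imaging facility.
    organizational: bool = False
    managed_specimens: set[str] = field(default_factory=set)


@dataclass
class MediaRecord:
    identifier: str
    specimen_id: str
    owner_users: set[str] = field(default_factory=set)
    owner_teams: set[str] = field(default_factory=set)  # team names


@dataclass
class Project:
    owner: str
    media_ids: set[str] = field(default_factory=set)
    shared_with: set[str] = field(default_factory=set)


def can_view(user: str, media: MediaRecord, teams: list[Team], projects: list[Project]) -> bool:
    """Hypothetical view check covering only the access paths described in the text."""
    if user in media.owner_users:
        return True
    for team in teams:
        if user not in team.members:
            continue
        # Members of a team that owns the record.
        if team.name in media.owner_teams:
            return True
        # Organizational teams see all media of specimens they manage.
        if team.organizational and media.specimen_id in team.managed_specimens:
            return True
    for project in projects:
        # A project shares media only if its creator owns that media.
        if (
            media.identifier in project.media_ids
            and user in project.shared_with
            and project.owner in media.owner_users
        ):
            return True
    return False


# Usage with hypothetical users, teams and records.
teams = [
    Team("museum-collection", {"alice"}, organizational=True, managed_specimens={"spec-1"}),
    Team("imaging-lab", {"bob"}),
]
scan = MediaRecord("m-1", "spec-1", owner_users={"carol"}, owner_teams={"imaging-lab"})
projects = [Project("carol", {"m-1"}, {"dave"})]
```

Here carol (owner), alice (organizational team managing `spec-1`), bob (owning team), and dave (shared through carol's project) can all view `m-1`, while a user with none of these links cannot.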

This data model maps to virtually any image creation scenario and supports the long and complex image acquisition workflows and data relationships required for scientific and academic use cases that treat imagery as data (Figure 3). Our submission form anticipates that raw data may often be unavailable, and our processing event structure anticipates that some users will prefer to use one event to specify multiple sequential processing activities, while others might specify the same workflow through multiple events with fewer activities in order to preserve a number of intermediate media files (Figure 3A). In addition, during data submission, users are required to choose an imaging modality from a controlled vocabulary, which allows preloading of modality-specific metadata profiles and restricts allowable formats for media upload.
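A controlled modality vocabulary that preloads a metadata profile and restricts upload formats might look like the minimal sketch below. The modality terms, metadata field names, and file extensions are hypothetical placeholders, not MorphoSource's actual vocabulary or format rules, and `profile_for`, `start_submission`, and `check_upload` are illustrative helpers.

```python
from __future__ import annotations

from dataclasses import dataclass
from pathlib import PurePath


@dataclass(frozen=True)
class ModalityProfile:
    metadata_fields: tuple[str, ...]  # fields preloaded into the submission form
    allowed_formats: frozenset[str]   # lowercase file extensions accepted for upload


# Hypothetical controlled vocabulary; real terms, fields and formats will differ.
MODALITIES: dict[str, ModalityProfile] = {
    "photogrammetry": ModalityProfile(
        metadata_fields=("camera_model", "image_count", "lens_focal_length_mm"),
        allowed_formats=frozenset({"jpg", "tif", "dng", "ply", "obj"}),
    ),
    "ct": ModalityProfile(
        metadata_fields=("voltage_kv", "current_ua", "voxel_size_mm"),
        allowed_formats=frozenset({"tif", "dcm", "ply", "stl"}),
    ),
}


def profile_for(modality: str) -> ModalityProfile:
    """Reject any modality term outside the controlled vocabulary."""
    try:
        return MODALITIES[modality]
    except KeyError:
        raise ValueError(f"unknown imaging modality: {modality!r}") from None


def start_submission(modality: str) -> dict[str, None]:
    """Preload an empty metadata form with the modality-specific fields."""
    return {name: None for name in profile_for(modality).metadata_fields}


def check_upload(modality: str, filename: str) -> bool:
    """Accept a file only if its extension is allowed for the chosen modality."""
    extension = PurePath(filename).suffix.lower().lstrip(".")
    return extension in profile_for(modality).allowed_formats
```

Choosing the modality first means a single lookup drives both the form layout and the upload check, so the two cannot drift apart.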

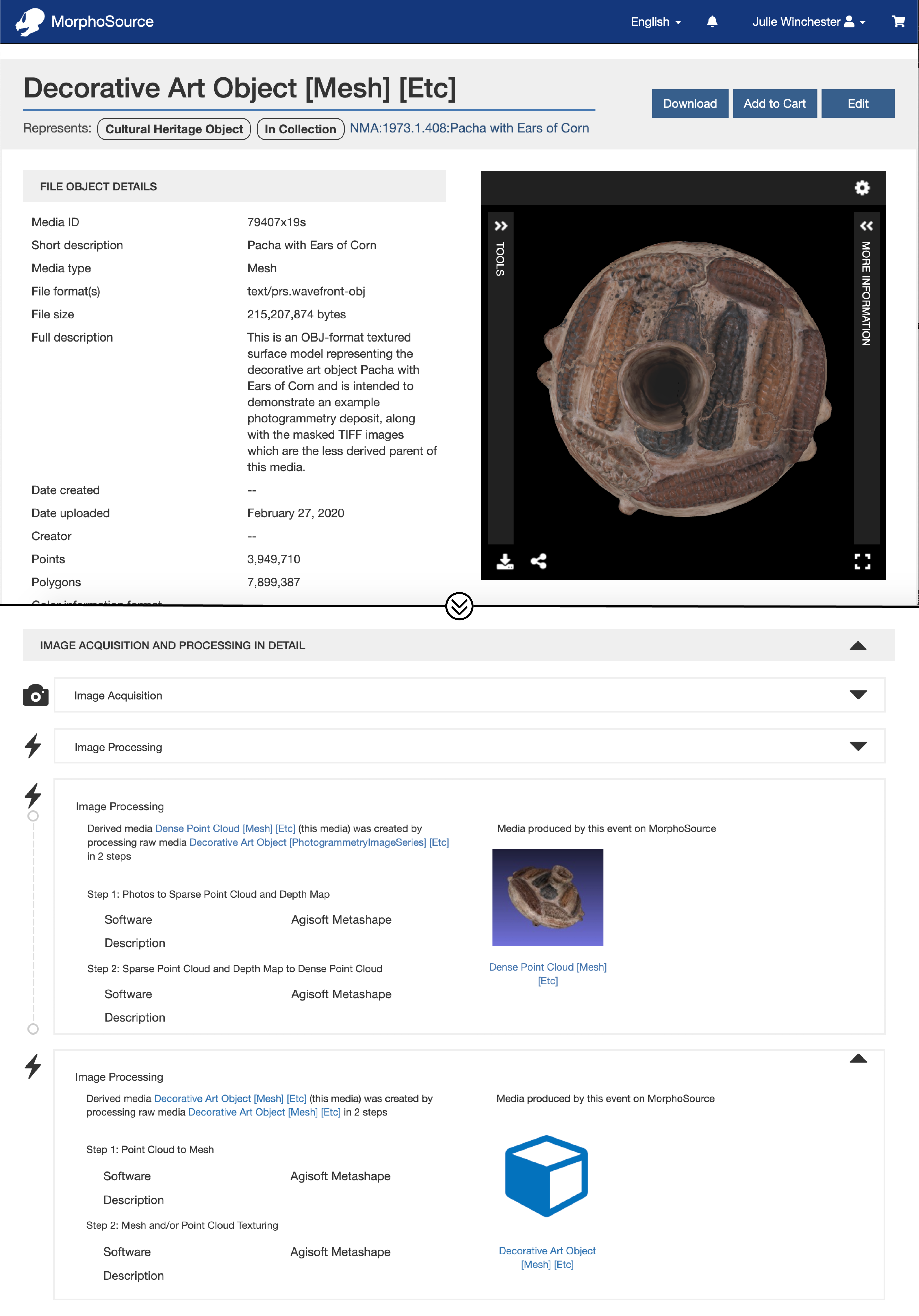

An Example Photogrammetry Deposit

To demonstrate the flexibility of this approach, we present an example deposit of a cultural heritage object imaged with photogrammetry (Figure 4). The object is Pacha with ears of corn, an Inca vessel dated to 1438–1532 in the collection of the Nasher Museum of Art at Duke University, imaged by the Wired Lab for Digital Art History & Visual Culture, Duke University. Figure 4 is a screenshot of how this model is represented on MorphoSource Rails. The upper portion shows the initial top-of-page view of this media object. The header banner gives a short description of the media, including its status as a cultural heritage object and the physical object it represents. Below the banner, on the right, the media can be previewed and interacted with. On the left, primary media metadata are provided, including title, creator, file format, and relevant data resolution properties such as the number of points and polygons in this 3D model. Though not shown, immediately below this view a user would find metadata on licensing, citation, ownership, and similar information, as well as other media related to the current media.

The lower portion of Figure 4 shows the MorphoSource Rails media show page scrolled down to the section “Image Acquisition and Processing in Detail”. This section displays the complex image acquisition paradata that led to the creation of the current media. The first two collapsed events, Image Acquisition and the first Image Processing event, describe the creation of a collection of raw-format camera images and of a derived collection of masked TIFF-format images generated from them. The first expanded processing event visible here describes the creation of a dense point cloud media file from the masked images. The final processing event describes the two processing steps used to generate the current media, a textured surface model, from the dense point cloud. Each of these event sections contains a link to the media generated by that event.
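The event history of the Pacha deposit can be modeled as a short parent/child chain and rendered oldest-first, much like the “Image Acquisition and Processing in Detail” section. In this minimal sketch the media identifiers (`M-1` to `M-4`), the `Media` class, the `event_history` helper, and the activity names are hypothetical; the two steps in the final processing event are not named here, so they appear as placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Media:
    media_id: str                      # hypothetical identifier
    description: str
    event_type: str                    # "Image Acquisition" or "Image Processing"
    activities: list[str] = field(default_factory=list)
    parent: Optional[Media] = None     # media this one was derived from


def event_history(media: Media) -> list[str]:
    """List the events behind `media`, oldest first, each with its output media."""
    lines = []
    current: Optional[Media] = media
    while current is not None:
        steps = "; ".join(current.activities) or "n/a"
        lines.append(f"{current.event_type}: {steps} -> {current.media_id}")
        current = current.parent
    return lines[::-1]


raw = Media("M-1", "raw-format camera images", "Image Acquisition")
masked = Media("M-2", "masked TIFF images", "Image Processing", ["masking"], raw)
cloud = Media("M-3", "dense point cloud", "Image Processing",
              ["dense point cloud generation"], masked)
model = Media(
    "M-4", "textured surface model", "Image Processing",
    ["processing step 1", "processing step 2"],  # placeholders for the two unnamed steps
    cloud,
)

for line in event_history(model):
    print(line)
```

Running it prints four lines, one per event, ending with `Image Processing: processing step 1; processing step 2 -> M-4`. Keeping both steps in one event mirrors the deposit described above; recording them as two events would instead preserve an intermediate media file between them.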

Conclusions

An important priority for MorphoSource Rails is to provide extensive and customizable support for collections organizations (Figure 6). Collections with little institutional infrastructure will benefit most from using the MorphoSource web repository and cloud storage. Larger institutions with greater means to produce and host large numbers of datasets, as well as international institutions, may prefer other options. Specifically, we expect larger institutions to be interested in using the tools MorphoSource has built for documenting paradata, generating interactive previews, and managing downstream use of data, while still relying on their own storage infrastructure. We are very interested in beginning collaborations to explore federations of locally managed MorphoSource instances. Please reach out to us!

Acknowledgements

Skip Down Icon from Figure 4 created by Marek Polakovic from the Noun Project and used here under a CC-BY license. We gratefully acknowledge the support of the Nasher Museum of Art at Duke University and the Wired Lab for Digital Art History & Visual Culture at Duke University for allowing us to use the example cultural heritage object included here. We also thank the Duke Fossil Primate Center, Matt Borths, and Steven Heritage for creating the textured primate skull model in Figure 3 and for permission to use images of it here.