Our learnings

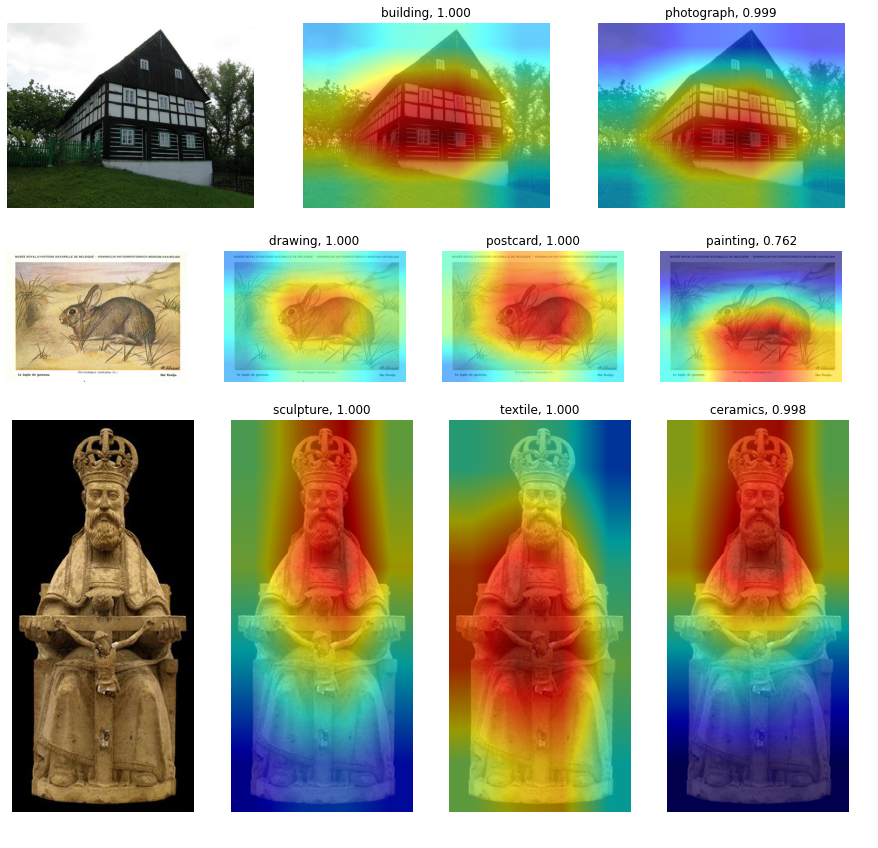

From our experiments we have concluded that the model is able to correctly identify multiple relevant labels for the given images. The multilabel approach is more helpful than using single labels since it can apply several labels to each image with high confidence.

Despite the interesting results, the performance of the resulting model is far from perfect, and we can attribute this to several factors. The most important is the relatively low quality of the dataset gathered. We found out that many of the images retrieved don’t have correct metadata.

Additionally, most of the data used for training was provided by the Norwegian DigitalMuseum. This means that the training data does not reflect the entire data distribution at Europeana, causing the model to be biased towards the data it has been trained with. The biases of the training data will translate in a lack of generalisation for the rest of images from Europeana. In simple terms, the model will perform well on images similar to the ones contained in the training dataset, but it will fail if the images are too different.

In general, our training data is good enough for the model to learn some basic patterns. The model did well despite the challenging setting of using data with incorrect labels. However, the quality of previous enrichments is not suitable for using them as training data for building a model to enrich our collections. A solution to this is to create a higher quality training dataset, to ensure that our model is presented with the right labels.

Future work: crowdsourcing

After training and evaluating the multilabel classification model, we have concluded that assigning multiple labels to the images from our collection is more suitable than enriching them with a single label.

We are considering expanding the vocabulary by including other terms relevant to cultural heritage. More importantly, we are planning to review and expand the training dataset, with the goal of identifying and correcting possible biases and errors. We would like to ensure that our model is presented with the right labels, which is expected to perform significantly better than when trained with 'noisy' labels. We have launched a crowdsourcing campaign for building a high-quality annotated dataset with Zooniverse, and we welcome contributions from our community.

You can follow our work in this Github repository. We also invite you to experiment with this Colab notebook, where you can make your own queries to the Europeana Search API and apply the multilabel classification model. Feel free to contact us at rd@europeana.eu if you have any questions or ideas!