Bulk downloading media and metadata using the Europeana API

Whilst many people build apps and services on real-time access to the API, a frequent request is for bulk downloads of specific subsets of items, including both their metadata and their associated media files. Thanks to the power of the API and the rich metadata supplied by providers, this is straightforward for anyone with a little coding knowledge.

Let's take a dataset such as these lovely stereoviews by Gaudin from the Rijksmuseum. The link to the Europeana Collections site is

http://www.europeana.eu/portal/search?f[IMAGE_ASPECTRATIO][]=Landscape&f[IMAGE_COLOUR][]=true&f[IMAGE_SIZE][]=extra_large&f[PROVIDER][]=Rijksmuseum&f[REUSABILITY][]=open&f[TYPE][]=IMAGE&page=1&q=photograph&qf[]=gaudin

which translates as the API call

http://www.europeana.eu/api/v2/search.json?wskey=xxxxxx&query=photograph&qf=gaudin&qf=IMAGE_ASPECTRATIO%3ALandscape&qf=IMAGE_COLOUR%3Atrue&qf=IMAGE_SIZE%3Aextra_large&qf=PROVIDER%3ARijksmuseum&reusability=open&qf=TYPE%3AIMAGE

You'll note that this has already been filtered to openly reusable items with 'extra large' (>4 megapixel), directly available image files (58 at the time of writing).
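The translation from portal link to API call can be sketched in a few lines of PHP. This is only an illustration: the function name `portalUrlToApiUrl` is made up for this example, and the mapping rules (`q` becomes `query`, `qf[]` becomes `qf`, `f[NAME][]=value` becomes `qf=NAME:value`, with `REUSABILITY` getting its own `reusability` parameter) are inferred solely from the pair of URLs above rather than from a documented specification.

```php
<?php
// Sketch only: mapping rules are inferred from the one portal/API URL pair
// shown in this post. portalUrlToApiUrl() is a hypothetical helper name.
function portalUrlToApiUrl(string $portalUrl, string $apikey): string
{
    // parse_str() expands f[FACET][]=value and qf[]=value into nested arrays
    parse_str((string) parse_url($portalUrl, PHP_URL_QUERY), $params);

    $parts = [
        'wskey=' . rawurlencode($apikey),
        'query=' . rawurlencode($params['q'] ?? '*'),
    ];

    // free-text refinements: qf[]=gaudin becomes qf=gaudin
    foreach ((array) ($params['qf'] ?? []) as $refinement) {
        $parts[] = 'qf=' . rawurlencode($refinement);
    }

    // facets: f[NAME][]=value becomes qf=NAME:value,
    // except REUSABILITY, which maps to its own parameter
    foreach ((array) ($params['f'] ?? []) as $facet => $values) {
        foreach ((array) $values as $value) {
            $parts[] = $facet === 'REUSABILITY'
                ? 'reusability=' . rawurlencode($value)
                : 'qf=' . rawurlencode($facet . ':' . $value);
        }
    }

    // other portal parameters (such as page) are deliberately ignored
    return 'http://www.europeana.eu/api/v2/search.json?' . implode('&', $parts);
}
```

Called with the portal link above and the placeholder key `xxxxxx`, this should reproduce the API call shown above. Other portal facets may well need different handling, so check the result against the API documentation before relying on it.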

The next thing we want to do is maximise the number of records per response using &rows=100, and set &profile=rich, which ensures that we get the link to the media file in the response. Finally, as we don't always know the full number of records but we want to download everything, we'll choose &cursor=*, which gives us cursor-based pagination; regular pagination would restrict us to 1,000 results (see the pagination documentation for details). So our full query becomes:

http://www.europeana.eu/api/v2/search.json?wskey=xxxxxx&query=photograph&qf=gaudin&qf=IMAGE_ASPECTRATIO%3ALandscape&qf=IMAGE_COLOUR%3Atrue&qf=IMAGE_SIZE%3Aextra_large&qf=PROVIDER%3ARijksmuseum&reusability=open&qf=TYPE%3AIMAGE&rows=100&profile=rich&cursor=*

Now that we have a base for our query, we can start coding. The logic is to set some general variables, make an API call, and loop through the records in the response, extracting the chosen metadata and saving it to a CSV file whilst also fetching and saving the images to a local directory. We then repeat the call using the nextCursor value taken from the previous response, until all the results have been fetched (or the manual limit has been reached, if set).

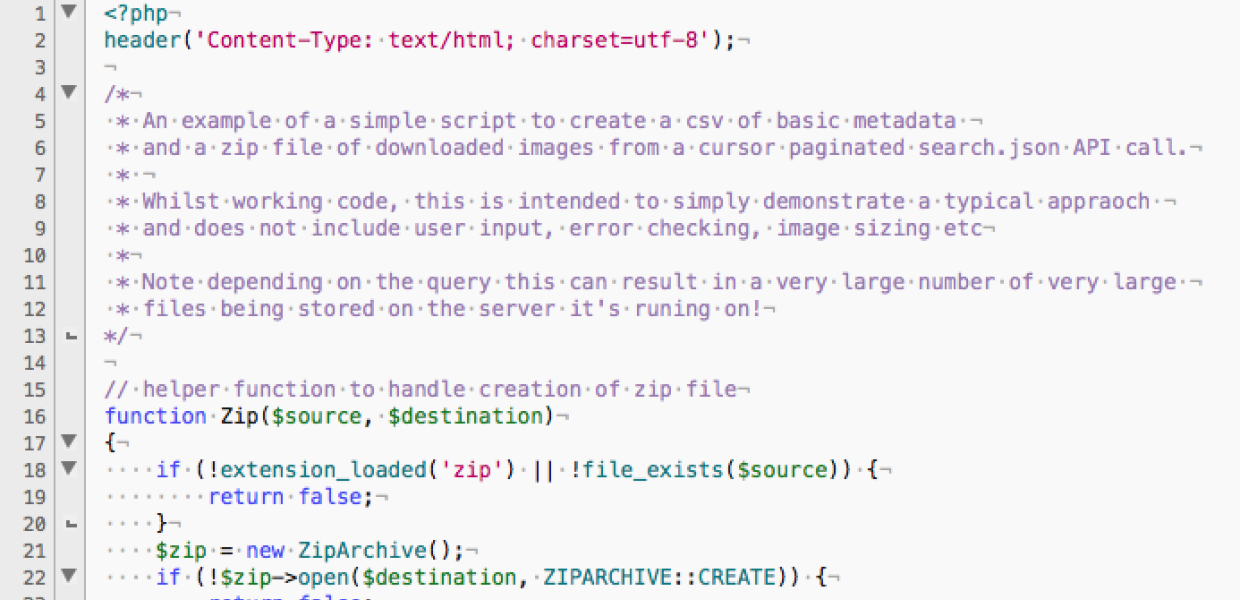
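The paging logic on its own can be sketched as follows, with the HTTP call abstracted away so the stopping conditions are easy to see. The names `fetchAllItems` and `$fetchPage` are made up for this illustration, and the sketch assumes that the last page of results carries no nextCursor value.

```php
<?php
// Sketch of cursor-based paging. $fetchPage is a hypothetical callable that
// takes a cursor string and returns the decoded JSON response as an object.
function fetchAllItems(callable $fetchPage, int $limit = PHP_INT_MAX): array
{
    $items = [];
    $cursor = '*'; // '*' asks the API for the first page

    while ($cursor !== null && count($items) < $limit) {
        $response = $fetchPage($cursor);
        $pageItems = $response->items ?? [];

        // an empty page means there is nothing more to collect
        if (count($pageItems) === 0) {
            break;
        }
        foreach ($pageItems as $item) {
            $items[] = $item;
        }

        // assumption: a missing nextCursor marks the final page
        $cursor = $response->nextCursor ?? null;
    }

    // trim any overshoot from the last page fetched
    return array_slice($items, 0, $limit);
}
```

In practice `$fetchPage` would build the search.json URL with the URL-encoded cursor, fetch it with `file_get_contents()` and pass the result through `json_decode()`. Keeping that step separate means the loop can be tried out against canned responses before it touches the live API.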

Of course you can do this in your language of choice, but a simple implementation in PHP is given below, and a slightly enhanced version which also zips up the resulting files can be found on GitHub under an open license.

<?php

header('Content-Type: text/html; charset=utf-8');

/*
 * An example of a simple script to create a csv of basic metadata
 * and a directory of downloaded images from a cursor paginated search.json API call.
 *
 * Whilst working code, this is intended to simply demonstrate a typical approach
 * and does not include user input, error checking, image sizing etc
 *
 * Note depending on the query this can result in a very large number of very large
 * files being stored on the server it's running on!
 */

// create a directory to store results in, based on timestamp
$directory = 'downloads/'.time().'/';
mkdir($directory, 0777, true);

// set some starting variables

$apikey='api2demo';
$fetchedrecords=0; // how many records have been fetched so far
$rows=18; // the number of rows from each API response - max 100
$records=''; // we'll set this from the api response
$limit=100; // change this to limit results rather than get full dataset
$cursor='*'; // starting cursor for pagination of full dataset - see http://labs.europeana.eu/api/search#pagination

// loop if we're just starting OR we've not yet fetched all records or reached the manually set limit AND we've got a cursor value for the next page
// (the strict === stops a total of 0 results from being mistaken for "just starting")
while ($records==='' || ($records!=0 && $fetchedrecords<$records && $fetchedrecords<$limit && $cursor!='')) {

    // set and fetch the API response
    $apicall='http://www.europeana.eu/api/v2/search.json?wskey='.$apikey.'&query=photograph&qf=gaudin&qf=IMAGE_ASPECTRATIO%3ALandscape&qf=IMAGE_COLOUR%3Atrue&qf=IMAGE_SIZE%3Aextra_large&qf=PROVIDER%3ARijksmuseum&reusability=open&qf=TYPE%3AIMAGE&cursor='.$cursor.'&rows='.$rows.'&profile=rich';
    $json = file_get_contents($apicall);
    $response = json_decode($json);
    //print_r($response);

    // check there are records then loop through each one, extracting metadata
    $records = (isset($response->totalResults) ? $response->totalResults : 0);
    echo $apicall.'<br>$totalResults: '.$records.'<br>';
    $fetchedrecords = $fetchedrecords + $rows;

    // create the html to display results/progress to the user
    $htmldata = '<hr>Page '.($fetchedrecords/$rows).'<br>';
    $htmldata .='Fetching records: '.($fetchedrecords-$rows+1).' to '.min($fetchedrecords,$records).' of '.$records.'<br>';

    foreach ((isset($response->items) ? $response->items : array()) as $record) {
        $europeanaID=$record->id;

        // get the image file and save it
        $imageURL=$record->edmIsShownBy[0];
        $filename=str_replace("/","_",$europeanaID).'.jpg';
        // $filename=basename($imageURL); // use this *only* if the dataset has unique filenames
        $output = $directory.$filename; // $directory already ends with a slash
        file_put_contents($output, file_get_contents($imageURL));

        // add the record to the csv file
        $csvdata = array($europeanaID,$imageURL,$filename);
        if (file_exists($directory.'data.csv') && !is_writable($directory.'data.csv')){
            echo 'csv file write failed';
            return false;
        }
        if ($datafile = fopen($directory.'data.csv', 'a')){
            fputcsv($datafile, $csvdata);
            fclose($datafile);
        } else {
            echo 'Failed to write metadata csv file';
        }

        // put in a small time delay to be nice to providers' servers
        sleep(1);
    }

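    // output the html to screen so user can see what's going on!
    echo $htmldata; echo '<hr>';

    // get the cursor value from the response in order to fetch the next page of results
    // (an empty cursor ends the loop when the response has no nextCursor)
    $cursor = (isset($response->nextCursor) ? urlencode($response->nextCursor) : '');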

}

?>